- Modern AI is based on optimization algorithms that automate tasks, analyze large volumes of data, and enable new business models.

- The main risks include algorithmic bias, job loss, privacy breaches, information manipulation, and more sophisticated cyberattacks.

- Generative AI adds specific challenges: hallucinations, deepfakes, technological dependence, rising costs, and intellectual property and reputation issues.

- Strong governance, clear regulatory frameworks, and the use of AI to manage risks are key to harnessing its potential without losing control of its impact.

The integration of artificial intelligence into every aspect of our lives is happening much faster than most organizations and individuals could have imagined. From the first recommendation algorithms, we have moved in record time to generative models capable of writing reports, analyzing contracts, creating hyper-realistic images, and making automated decisions in critical business processes.

This accelerated expansion opens up a huge range of possibilities, but it also brings risks, ethical dilemmas, and regulatory challenges that cannot be ignored. It's not about choosing between an apocalyptic vision and naive techno-optimism, but about calmly understanding what current AI actually does, what it doesn't do, where it adds the most value, and where it can become a serious problem if not managed wisely.

What do we understand today by artificial intelligence?

When we talk about AI in everyday life, we're actually referring to a set of optimization algorithms and statistical models trained on large volumes of data. They are not conscious machines or "brains" that think like a person, but systems that learn patterns and generate outputs that are useful (or plausible) for very specific tasks.

In the business world, AI has become popular because it allows you to automate routine tasks, analyze huge databases, and support decision-making with a precision and speed unattainable by a human team. From assisted medical diagnosis to the early detection of financial fraud, use cases are multiplying across all sectors.

It is important to differentiate, however, between so-called narrow (or restricted) artificial intelligence, which solves specific problems (classifying images, translating texts, recommending content…), and the hypothetical general artificial intelligence, which would aspire to reason about any task like a human being. Currently, what we use on a massive scale are narrow systems, however impressive models like ChatGPT, Bard, or DALL-E may seem.

These models, especially language models, are designed to calculate the most likely and socially acceptable response given an input, not to understand the world or have their own goals. They mimic reasoning, but under the hood it's sophisticated statistical calculation, not consciousness or intention.
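To make "the most likely response given an input" concrete, here is a deliberately tiny sketch: a word-pair (bigram) counter that suggests the most frequent next word. It is only an illustration of the statistical idea; real language models use neural networks trained on vastly more text, and the corpus and function names below are invented for the example.

```python
from collections import Counter, defaultdict

# Toy training text (made up for this example).
corpus = "the model predicts the next word and the model repeats the pattern"

def build_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    follow = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follow[current][nxt] += 1
    return follow

def most_likely_next(follow, word):
    """Return the word seen most often after `word`, or None if unseen."""
    candidates = follow.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]

follow = build_bigrams(corpus)
suggestion = most_likely_next(follow, "the")  # "model" follows "the" twice
```

The counter has no notion of meaning: it simply returns whatever continuation was most frequent in its data, which is the point the paragraph above makes.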

How AI works: key techniques

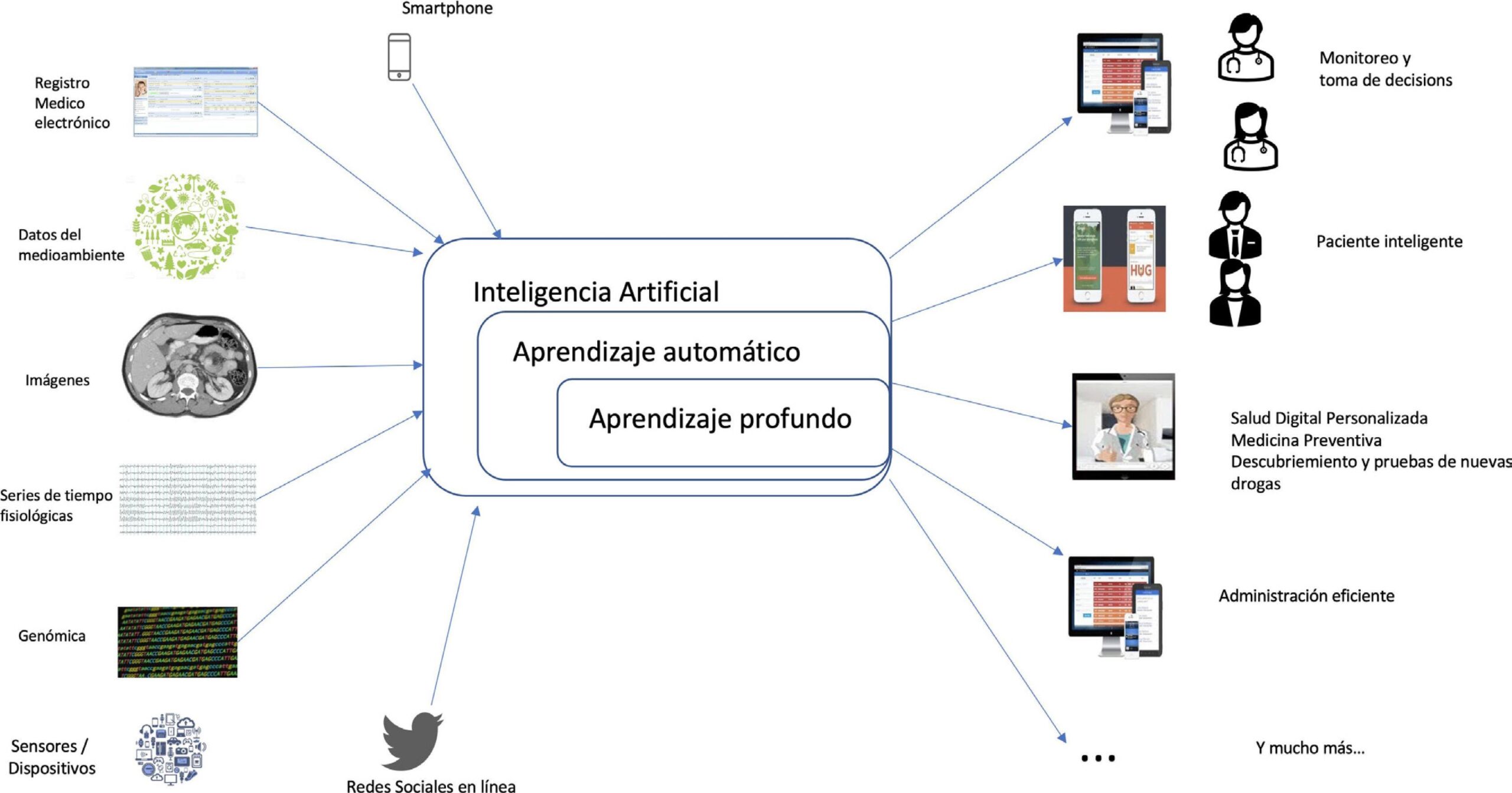

Most modern AI applications rely on three major technological building blocks: machine learning, deep learning, and natural language processing, to which is added computer vision for everything related to images and video.

Machine learning

Machine learning (ML) is the branch that focuses on building algorithms capable of learning from data without needing to explicitly program each rule. The system finds patterns and, based on them, makes predictions, classifications, or recommendations.

In supervised learning, models are trained with labeled data indicating the correct answer (for example, whether a transaction was fraudulent or not). In unsupervised learning, on the other hand, the algorithm detects hidden structures and groups in unlabeled data, which is very useful for segmenting customers, detecting anomalies, or grouping behaviors.
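The difference between the two approaches can be sketched in a few lines. The example below is a simplified illustration, not a production method: the supervised part learns an average amount per label (a nearest-centroid classifier), and the unsupervised part splits unlabeled amounts into two groups (a one-dimensional k-means with k=2). All amounts, labels, and function names are made up, and the clustering assumes at least two distinct values.

```python
from statistics import mean

# Supervised: each example carries the correct answer (values are invented).
labeled = [(12.0, "ok"), (25.0, "ok"), (30.0, "ok"),
           (900.0, "fraud"), (1200.0, "fraud")]

def train_centroids(examples):
    """Learn one average amount (centroid) per label."""
    by_label = {}
    for amount, label in examples:
        by_label.setdefault(label, []).append(amount)
    return {label: mean(values) for label, values in by_label.items()}

def predict(centroids, amount):
    """Assign the label whose centroid is closest to the amount."""
    return min(centroids, key=lambda label: abs(centroids[label] - amount))

centroids = train_centroids(labeled)
supervised_guess = predict(centroids, 1000.0)

# Unsupervised: no labels, the algorithm only looks for groups.
def two_means(values, iterations=10):
    """Tiny 1-D k-means with k=2, starting from the min and max values."""
    low, high = min(values), max(values)
    for _ in range(iterations):
        group_low = [v for v in values if abs(v - low) <= abs(v - high)]
        group_high = [v for v in values if abs(v - low) > abs(v - high)]
        low, high = mean(group_low), mean(group_high)
    return group_low, group_high

small, large = two_means([12.0, 25.0, 30.0, 900.0, 1200.0])
```

Note that the unsupervised version finds the same two groups without ever being told which one is "fraud": naming and interpreting the groups remains a human task.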

A typical example in industry is the use of ML to analyze real-time data from factory sensors (temperature, vibrations, usage cycles) and anticipate when a machine is going to fail, thus enabling predictive maintenance.
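As a rough sketch of the idea, the snippet below raises an alert when the rolling average of a vibration sensor exceeds a limit. This is a fixed-threshold stand-in rather than a learned model: in a real deployment the limit would typically be derived from historical failure data. The readings, the window size, and the 7.0 limit are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean

def first_alert(readings, window=3, limit=7.0):
    """Return the index of the first reading at which the rolling mean
    of the last `window` values exceeds `limit`, or None if it never does."""
    recent = deque(maxlen=window)
    for index, value in enumerate(readings):
        recent.append(value)
        if len(recent) == window and mean(recent) > limit:
            return index
    return None

# Hypothetical vibration readings (mm/s) drifting upward before a failure.
vibration_mm_s = [4.1, 4.3, 4.2, 4.8, 5.9, 7.2, 8.5, 9.1]
alert_index = first_alert(vibration_mm_s)
```

Averaging over a window instead of reacting to a single reading reduces false alarms from isolated spikes, at the cost of a slightly later alert.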

Deep learning

Deep learning is a subset of machine learning that uses multi-layered artificial neural networks to learn increasingly complex representations of data. These networks are inspired by the structure of the brain, although their actual functioning differs considerably from biology.

Deep learning has made possible applications like voice recognition, advanced computer vision, recommendation systems, and autonomous driving. With access to enormous datasets and computing power, these networks can detect very subtle relationships that were previously impossible to model.

In sectors such as automotive, for example, deep learning is used to interpret an autonomous vehicle's camera images and radar and lidar data, estimate distances, predict trajectories, and decide on maneuvers almost instantaneously.

Natural Language Processing

Natural language processing (NLP) is concerned with enabling systems to understand, analyze, and generate human language, both text and voice. This includes tasks such as classifying documents, summarizing texts, translating, answering questions, or holding conversations.

Current large language models (LLMs) are capable of detecting syntactic structures and semantic nuances in vast amounts of text. This allows them to produce surprisingly natural responses. They are used in chatbots, virtual assistants, sentiment analysis, customer service, and internal support in companies.

Computer vision

Computer vision focuses on enabling machines to interpret images and videos with a level of detail similar to that of a person. Detecting objects, recognizing faces, reading characters, measuring dimensions, or identifying defects in an industrial part are some examples.

This technology has become a key component in quality control in factories, surveillance systems, medical imaging diagnostics and augmented reality experiences, among many other uses.

Advantages and opportunities of AI

On an economic and social level, AI opens the door to a new wave of innovation in products, services, and business models. In Europe, for example, it is considered an essential driver for the transformation of sectors such as the green economy, industrial technology, agriculture, health, tourism, and fashion.

In the business world, one of AI's greatest strengths is the automation of repetitive processes and tedious tasks. Physical robots and intelligent software can handle mechanical operations, incident classification, generation of standard responses, or data extraction, freeing up people's time for creative and strategic tasks.

Another key advantage is the ability to reduce human error in high-repetition or high-precision activities. From detecting micro-defects in parts using infrared cameras to automatically entering data, AI minimizes errors and improves the traceability of what happens.

At the same time, intelligent systems bring remarkable accuracy to the analysis of large volumes of information. This generates useful indicators for deciding on investments, adjusting prices, sizing staff, or redesigning processes, and it strengthens the quality of business decisions.

In healthcare, AI is already being used to support diagnoses based on medical images, design personalized treatments, and accelerate drug discovery. In banking and finance, it helps to detect fraud, assess credit risks, and automate operations in stock markets.

Public services also benefit: transport optimization, intelligent waste management, energy saving, personalized education, and more efficient e-government are clear lines of application. At the same time, analysts point out that responsible use of AI can contribute to strengthening democracy by helping to combat disinformation, detect cyberattacks, and improve transparency in procurement processes.

Generative AI: a new leap in capabilities… and in risks

The emergence of generative AI has marked a turning point, as these systems are capable of creating original and believable content: technical texts, images, audio, video, or code, down to everyday uses such as creating WhatsApp stickers with ChatGPT.

For businesses, this opens up the possibility of producing documents, marketing campaigns, reports, or prototypes much faster, as well as supporting teams with productivity copilots. However, it also poses additional challenges in terms of quality, intellectual property, security, and reputation.

Among the most visible risks is the generation of incorrect information, or "hallucinations": the model fabricates data or references that appear convincing but do not correspond to reality. If not properly reviewed, this can lead to erroneous decisions, especially in critical areas such as healthcare, law, or finance.

Added to this is the question of information security and privacy. If a model is fed with sensitive data (customers, patients, business strategy) without the proper safeguards, there is a risk of leaks, regulatory non-compliance, or improper reuse of that information.

Furthermore, generative AI can foster excessive technological dependence, with rising costs associated with the use of large models. It can also homogenize content and proposals, reducing differentiation between brands if they all use the same tools without customizing them.

Cross-cutting risks of artificial intelligence

Beyond the generative aspect, the massive deployment of AI brings with it a set of structural risks that affect employment, fundamental rights, security, and economic stability. Understanding them is essential to being able to manage them.

Job displacement and skills gap

AI-driven automation has an ambiguous effect on employment: it eliminates certain positions, transforms others, and creates new professions. Administrative tasks, highly routine office work, or basic control tasks are especially vulnerable.

Without a clear policy of professional retraining and skills updating, many people may be left behind in the labor market, widening existing inequalities. In a planned economic system, this transition could be better organized; under current capitalism, it usually translates into instability and precariousness while the productive fabric adjusts.

Algorithmic biases and discrimination

Algorithms learn from historical data that often reflect existing prejudices, inequalities, and power structures. If these biases are not corrected, the systems reproduce and amplify them in hiring processes, loan approvals, insurance management, or even in the judicial system.

We already know of cases of personnel selection models that systematically penalized women because they had been trained on data from predominantly male workforces, and of racially biased criminal risk assessment tools. Mitigating this risk requires independent audits, diverse development teams, and balanced, reviewed training data.
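One basic check an audit might include is comparing how often each group receives a favorable outcome. The sketch below computes per-group selection rates and the ratio between the lowest and the highest rate. The 0.8 cutoff mirrors the "four-fifths" rule of thumb used in US employment guidance and is only one possible threshold; the decision data and function names are invented, and a real audit would look at many more metrics.

```python
def selection_rates(decisions):
    """decisions: list of (group, selected) pairs -> {group: share selected}."""
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + (1 if was_selected else 0)
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity).
    Assumes at least one group has a non-zero selection rate."""
    return min(rates.values()) / max(rates.values())

# Hypothetical screening outcomes: 6 of 10 men and 3 of 10 women selected.
decisions = (
    [("men", True)] * 6 + [("men", False)] * 4
    + [("women", True)] * 3 + [("women", False)] * 7
)
rates = selection_rates(decisions)
ratio = impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative threshold, not a legal test
```

A low ratio does not prove discrimination by itself, but it flags a model whose outcomes deserve a closer look at the training data and features.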

Privacy, surveillance and fundamental rights

AI works better the more data it has, which incentivizes the mass collection of personal information. Facial recognition systems, online tracking, creation of detailed behavioral profiles, or social media analysis can violate privacy and, in the wrong hands, become surveillance tools.

European legislation (including the AI Act) focuses on limiting high-risk uses, such as mass biometric identification or automated decision-making without the possibility of human intervention. Even so, the danger of abuse remains, especially in contexts with less democratic oversight.

Security, cyberattacks and malicious use

AI is a double-edged sword: it can do much to better prevent, detect, and respond to cybersecurity threats, but it can also enhance the capabilities of attackers. Automating phishing campaigns, generating more sophisticated malware, or bypassing detection systems using adversarial examples are some of the risks.

In the military and national security sphere, the impact of autonomous weapons, automated defense systems, and AI-supported cyber warfare is under debate. The international community is still far from a robust consensus on the ethical and legal limits of these applications.

Information manipulation and deepfakes

With generative AI it is relatively easy to create fake but very believable videos, audio, and images, known as deepfakes. These pieces can be used for extortion, political manipulation, reputational attacks, or mass disinformation campaigns.

At the same time, algorithms that personalize content on social media can enclose users in echo chambers. This reinforces extreme viewpoints and further polarizes the public sphere. AI thus becomes an amplifier of existing dynamics, with a reach that is difficult to control.

Unpredictability and complexity of systems

As models become more complex and autonomous, their behavior is becoming less and less transparent, even to their creators. This makes it difficult to explain why a particular decision has been made, which is critical in regulated areas.

If crucial functions (healthcare, infrastructure, justice, transport) are delegated to opaque systems, the risk of systemic failures, cascading effects, and loss of human control increases. Hence the importance of designing explainable models, with traceability and the capacity for manual intervention.

Ethical, regulatory and liability challenges

The rise of AI has raised complex issues: Who is responsible if an algorithm causes harm? How are fairness and transparency guaranteed? What limits should be imposed? Traditional regulations lag behind the pace of innovation, and this creates legal loopholes.

The European Union's AI Act classifies applications by risk level and establishes stricter requirements for high-impact sectors (health, transportation, employment, justice, security). Obligations regarding documentation, auditing, training data management, and human oversight are foreseen.

One particularly delicate issue is liability in case of damages. If a self-driving car causes an accident or an automated system wrongfully denies a loan, is the hardware manufacturer, the model developer, the company operating it, or the end user responsible? A liability regime that is too lax can discourage quality; one that is too rigid can stifle innovation.

In parallel, AI ethics demands going beyond formal compliance with the law. Organizations, developers, and regulators must agree on principles of justice, non-discrimination, respect for autonomy, and minimization of harm. That inevitably requires an informed public debate, involving not only companies and governments, but also citizens and affected groups.

AI governance in organizations: from chaos to a common framework

In many companies, the adoption of AI has started informally: each department tests its own model or integrates an external service on its own. Marketing uses a text generator, Operations trains an incident classifier, Human Resources experiments with CV screening tools…

This "model-to-model" approach has the advantage of speed, but in the medium term it causes technological fragmentation, duplication of effort, and lack of control. Dozens of isolated solutions emerge, without a common strategy, traceability, or shared cost and value metrics.

The risks pile up: nobody knows how many models are in production, what data they use, or who maintains them. Decision records are incomplete, hindering internal or regulatory audits. And the cloud services bill keeps growing without anyone having a clear view of the return.

The alternative is to move towards a centralized governance framework that allows continued experimentation, but on a common foundation: model catalogs, data policies, access controls, shared monitoring tools, traceability, and risk assessment. Specialized architectures, such as enterprise AI platforms, seek precisely to combine local agility with global control.
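A model catalog does not have to start as a large platform. As a minimal sketch, assuming a very simple in-memory registry (the class names, fields, and risk levels below are hypothetical, not taken from any specific product), it could record who owns each model, what data it uses, and whether it is in production:

```python
from dataclasses import dataclass, field

@dataclass
class ModelRecord:
    name: str
    owner: str              # team or person responsible for maintenance
    data_sources: list      # datasets the model reads
    risk_level: str         # illustrative levels: "low", "medium", "high"
    in_production: bool = False

@dataclass
class ModelCatalog:
    records: dict = field(default_factory=dict)

    def register(self, record):
        """Add a model; duplicate names are rejected to keep one source of truth."""
        if record.name in self.records:
            raise ValueError(f"model already registered: {record.name}")
        self.records[record.name] = record

    def production_models(self):
        """Names of all models currently in production, sorted."""
        return sorted(r.name for r in self.records.values() if r.in_production)

    def high_risk_models(self):
        """Names of models tagged high risk, regardless of status."""
        return sorted(r.name for r in self.records.values() if r.risk_level == "high")

catalog = ModelCatalog()
catalog.register(ModelRecord("cv-screening", "HR", ["applications"], "high", True))
catalog.register(ModelRecord("incident-classifier", "Operations", ["tickets"], "low", True))
catalog.register(ModelRecord("ad-copy-generator", "Marketing", ["campaign briefs"], "low"))
```

Even a registry this small answers the questions raised earlier: how many models are in production, what data they use, and who maintains them.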

Without this discipline, AI becomes a source of technical debt, legal uncertainty, and cost overruns. With it, however, it becomes another strategic layer, at the level of cybersecurity or data management, capable of providing sustainable competitive advantages.

AI applications in enterprise risk management

Paradoxically, many of the threats associated with AI can be mitigated using AI itself as an ally to manage risks within organizations. In areas such as operational risks, regulatory compliance, anti-money laundering, and information security, it is already being used with good results.

On the one hand, algorithms make it possible to analyze large amounts of internal and external data in a very short time, detecting anomalous behavior patterns, worrying trends, or combinations of factors that usually precede relevant incidents.

Predictive models are also especially valuable. These tools help anticipate the materialization of certain risks based on historical trends, which allows for planning preventive measures, strengthening controls, or adapting insurance coverage.

In fraud prevention, AI can monitor transactions, system access, and financial movements in real time, identifying suspicious operations that escape the human eye. Similarly, in compliance risk management, segmentation algorithms facilitate the classification of clients, products, or jurisdictions according to their exposure profile.
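A very simplified version of this kind of monitoring is flagging amounts that sit far from what is typical for an account. The sketch below scores each amount by its distance from the median, measured in units of the median absolute deviation (MAD), a statistic that is not distorted by the outliers it is trying to find. The amounts and the threshold of 5 are assumptions for illustration; real fraud systems combine many signals and usually learned models.

```python
from statistics import median

def flag_unusual(amounts, threshold=5.0):
    """Return the amounts whose distance from the median, in MAD units,
    exceeds the threshold."""
    center = median(amounts)
    mad = median([abs(a - center) for a in amounts])
    if mad == 0:
        return []  # all values (nearly) identical: nothing stands out
    return [a for a in amounts if abs(a - center) / mad > threshold]

# Hypothetical card payments in euros; one is clearly out of pattern.
payments = [20, 35, 18, 42, 27, 31, 25, 2400]
suspicious = flag_unusual(payments)
```

Flagged items are candidates for human review rather than automatic rejections, which keeps professional judgment in the loop.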

All of this requires, however, quality, well-governed, and representative data. Without a solid informational foundation, models generate false positives, biases, and incorrect decisions. Technology does not replace professional judgment, but rather complements it and makes it more efficient.

In recent years, specific solutions based on generative AI have also emerged that act as copilots for risk management. These tools help identify, describe, and assess threats based on applicable regulations, the industry, and each company's processes. When integrated into robust platforms with appropriate controls, these assistants significantly increase the productivity of risk teams.

The combination of all the above paints an ambivalent picture: artificial intelligence has enormous potential to improve how we produce, decide, and live, but it also amplifies inequalities, errors, and conflicts if used without criteria or control. Finding the balance involves investing in training, strengthening regulation, deploying solid governance frameworks, and always keeping people at the center of decisions, using AI as a tool and not as an end in itself.
